Artificial Intelligence (AI) has become one of the most talked-about technologies in recent years, particularly in the field of cybersecurity. Promises of AI-driven solutions transforming the way we detect and respond to threats are everywhere. However, to truly understand the impact of AI in cybersecurity, we need to cut through the hype and confront the real challenges it presents.

Understanding AI: Separating Fact from Fiction

AI, at its core, is a set of algorithms designed to process data in a way that mimics intelligence. From generating human-like text to recognizing patterns in vast datasets, AI can produce outcomes that appear almost magical. However, it’s important to recognize that AI doesn’t think or reason like a human; it works through complex probability mappings learned from previously encountered data. This distinction is crucial because it defines both the power and the limitations of AI in cybersecurity.

For example, language generation tools are impressive but are fundamentally based on predicting the next word or phrase in a sequence. They don’t truly understand the content they generate. Similarly, AI in cybersecurity can identify patterns and anomalies, but it doesn’t inherently understand the context or intent behind those patterns. This makes it essential to understand what AI can and cannot do, particularly in a field as critical as cybersecurity.
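
To make the "predicting the next word" idea concrete, here is a minimal sketch of a bigram predictor. It is far simpler than any real language generation tool, and the corpus and function names are invented for illustration. It only counts which word most often followed another in its training text, which shows how prediction can happen without any understanding.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Hypothetical toy corpus, invented for this example.
corpus = "the firewall blocked the attack and the firewall logged the event"
model = train_bigrams(corpus)

print(predict_next(model, "the"))      # firewall
print(predict_next(model, "malware"))  # None: the word never appeared in training
```

The second call returns nothing because the model has no data for that word, which mirrors the limitation described above: output depends entirely on previously encountered data.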

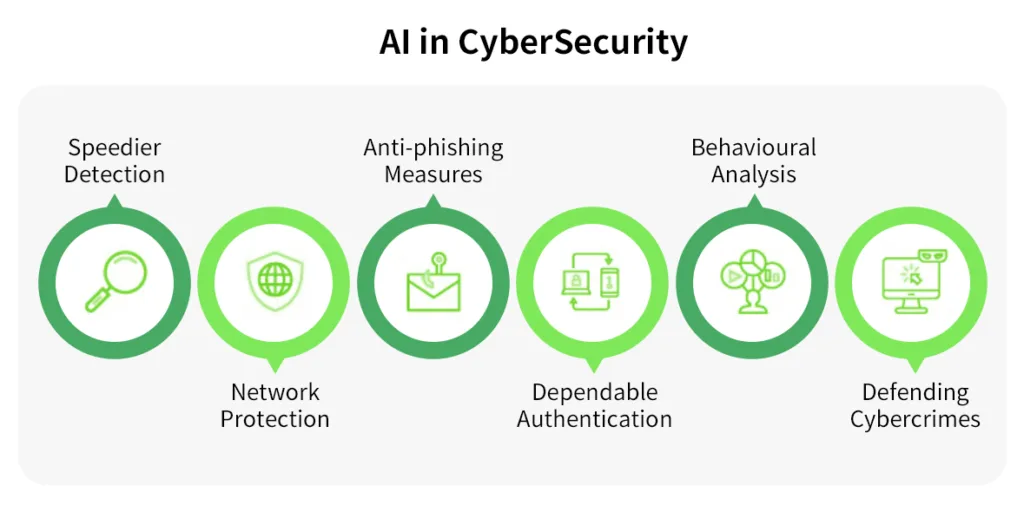

The Importance of AI in Cybersecurity

Despite its limitations, AI offers significant potential in cybersecurity. By applying probability mappings to datasets of malicious tools and behaviors, AI can help identify threats that might otherwise go unnoticed. The ability to detect patterns that indicate malicious activity, even in previously unseen events, is a powerful tool in the fight against cybercrime.

However, the effectiveness of AI in cybersecurity is not guaranteed. It relies heavily on the quality of the algorithms used and the data they are trained on. If an algorithm isn’t tuned to the right parameters or lacks sufficient high-quality data, it may miss threats or generate false positives. This makes the careful development and implementation of AI critical to its success.
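
The trade-off between missed threats and false positives can be illustrated with a small sketch. It assumes a very simple detector that scores events by how far they deviate from a baseline (a z-score), which is a stand-in for whatever model a real product uses. The login counts, labels, and thresholds are hypothetical values chosen for illustration.

```python
from statistics import mean, stdev

def zscores(baseline, observations):
    """Score each observation by its distance from the baseline mean, in standard deviations."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    return [abs(x - mu) / sigma for x in observations]

def evaluate(scores, labels, threshold):
    """Return (false_positives, missed_threats) when alerting at or above `threshold`."""
    false_positives = sum(1 for s, bad in zip(scores, labels) if s >= threshold and not bad)
    missed = sum(1 for s, bad in zip(scores, labels) if s < threshold and bad)
    return false_positives, missed

# Hypothetical data: failed logins per hour during normal operation.
baseline = [4, 5, 6, 5, 4, 6, 5, 5]
# Hypothetical new observations, with ground-truth labels for evaluation.
observed = [5, 8, 7, 12, 30]
is_malicious = [False, False, True, False, True]

scores = zscores(baseline, observed)
for threshold in (2.0, 5.0, 10.0):
    fp, missed = evaluate(scores, is_malicious, threshold)
    print(f"threshold={threshold}: false positives={fp}, missed threats={missed}")
```

With this data, a low threshold catches both malicious events but raises two false alarms, while a high threshold raises none but misses the quieter attack. No single setting is correct in general, which is why tuning against representative data matters.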

Challenges on the Path to Successful AI Implementation

- Data and Algorithm Quality: AI is only as good as the algorithms and data that underpin it. Poorly designed algorithms or insufficient data can lead to inaccurate results, undermining the effectiveness of AI-driven cybersecurity tools.

- Opacity of AI Models: Some AI models operate as “black boxes,” where the internal workings are not transparent. This can create trust issues: when it is difficult to understand why a decision was made, missed threats and biased outcomes are harder to spot and correct.

- Integration and Expertise: AI is not a plug-and-play solution. Successful implementation requires careful integration with existing security infrastructure and a deep understanding of how to interpret and act on AI-generated insights. Security teams must be trained to understand AI’s limitations and effectively incorporate its outputs into their broader security strategy.

Dispelling Myths Around AI in Cybersecurity

Several myths persist around AI in cybersecurity, clouding its potential:

- Myth 1: AI Automatically Enhances Any Tool: Not all AI algorithms are suited for every cybersecurity application. The wrong algorithm can lead to poor outcomes, emphasizing the need for carefully selecting the right tools for the job.

- Myth 2: One-Size-Fits-All Solutions: Different organizations have different needs. Understanding these needs and choosing the appropriate AI solution is critical to achieving the desired outcomes.

- Myth 3: AI Provides Unbreachable Security: AI is not immune to attacks. Just like any technology, it requires ongoing monitoring and adaptation to remain effective.

Considerations for Executives

When considering AI in a cybersecurity program, it’s important to approach it with a critical eye. A generic AI model may lack the necessary knowledge of the threat landscape and could require extensive training, which is both complex and resource-intensive. Executives should evaluate cybersecurity vendors carefully and make sure they understand how each vendor’s AI algorithms work, how they are trained, and on what data. Without this understanding, the risks could outweigh the benefits.

Conclusion

AI has the potential to revolutionize cybersecurity, but its implementation must be approached with caution and expertise. By understanding both the opportunities and the challenges, organizations can leverage AI to enhance their security posture without falling prey to the hype. The key to success lies in choosing the right tools, ensuring transparency in AI models, and integrating AI with the existing security infrastructure in a way that maximizes its potential while minimizing risks.

About The Author

Sandesh Reddy

Sandesh is a Solutions Architect at ByteBridge with a strong background in security and networking. He specializes in Secure Access Service Edge (SASE) projects and holds CCNA, AWS, Zscaler, and Netskope certifications. Sandesh is committed to driving innovation and delivering value-driven solutions to address the evolving needs of modern enterprises.